How the AI Gold Rush Is Influencing Data Center Design Trends

May 06, 2025 | Q&A with Joy Hughes and Ryan Cook

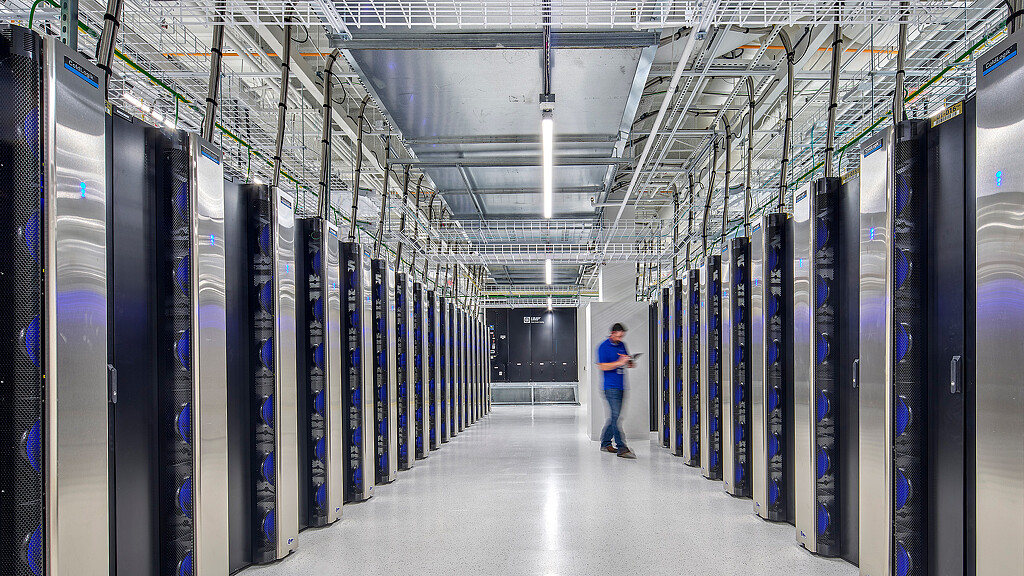

The demand for AI and digital infrastructure is booming, leading to a record $57 billion in global data center investment in 2024, according to Colliers. As demand for AI and cloud services continues to surge while land and energy supplies remain scarce, many data center operators and developers can’t build or redevelop facilities equipped to handle this enormous amount of data and power fast enough to keep pace.

We sat down with Joy Hughes, Global Accounts Director, and Ryan Cook, Critical Facilities leader for Gensler’s Southwest region, to discuss how the AI revolution is poised to fuel data center investment and growth, and other trends influencing the shape, speed, and operations of data center design.

How is the AI boom fueling the growth of data centers?

Joy Hughes: Especially for our hyperscale data center clients, everything is speed-to-market right now. AI is driving the capacity, but our challenge is how fast we can get these facilities up and running. When our clients come to us, they already have service agreements with end users set up with promised dates. They have to be move-in ready, so everything is moving very quickly, and it can come down to how the buildings are built, and what they’re made of. Everything is about speed, speed, speed.

Ryan Cook: You’ve got hyperscalers (like Amazon, Google, or Microsoft) that are building for their own demand, and then developers that are building for the hyperscalers that have contracts with them. This AI spike is driving demand, and nobody knows how big it will be or what the real capacity will be. Everybody’s just trying to plan for it.

Joy: AI really is a catalyst, and we’re seeing larger and larger campuses to house AI data centers. Whereas you used to see four buildings on a campus, now you’re seeing 10 to 20.

How is the surging demand for AI and advanced technologies impacting data center design and operations?

Joy: Liquid cooling is becoming standard. Especially with NVIDIA, Amazon, and others coming out with new chips, air just doesn’t cut it anymore to cool them. They need constant cooling. So, the liquid is actually piped through them. And everything has to keep moving at a certain speed to keep these chips cool.

This is changing the mechanical design, which then changes the building design of data center facilities to a point where there’s a lot of retrofitting. Some of these colocation providers have held onto their sites for years, so there are many retrofits going back into some of these sites that were built out for air cooling, and they’re now installing liquid cooling equipment.

Ryan: It takes a lot more power to run AI servers, because they’re learning. As you ramp up on power density, you also need to ramp up on cooling, because there’s so much more heat that needs to be exhausted. The standard cabinet for a colocation facility has a power density of around 4 kW, and it can go up to 8 or 12 kW. Hyperscale data centers are a bit higher. A lot of the cabinets that we’re seeing to support AI and liquid cooling are going up to 100+ kW.

Liquid cooling has become a big topic of conversation, along with higher-density cabinets to accommodate the level of power that AI requires. Many hyperscalers are transitioning out of air-cooled facilities into liquid-cooled facilities, and they need the infrastructure to support this transition.

It’s not just the equipment, but also piping, because you need to support a lot of water through these pipes, and so you’re literally piping to every single rack. We call that direct-to-chip. Liquid cooling has become the biggest cooling method that clients are introducing in their data centers. There are other methods, like immersion cooling, but not many companies are doing that.
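To give a rough sense of why higher cabinet densities mean so much more piping, the sketch below estimates the water flow needed to carry away a cabinet's heat using the standard heat-transfer relation (heat = mass flow × specific heat × temperature rise). It is a back-of-the-envelope illustration, not a design calculation: the 10 K temperature rise across the cabinet is an assumed value, the function and constant names are hypothetical, and it assumes all cabinet power becomes heat that the liquid loop removes.

```python
# Approximate specific heat of liquid water near room temperature, in J/(kg*K).
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0

# Assumption for illustration only: coolant warms by 10 K across the cabinet.
ASSUMED_DELTA_T_K = 10.0


def coolant_flow_kg_per_s(heat_load_kw: float, delta_t_k: float) -> float:
    """Water mass flow (kg/s) needed to absorb heat_load_kw with a delta_t_k rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    heat_load_w = heat_load_kw * 1000.0
    return heat_load_w / (WATER_SPECIFIC_HEAT_J_PER_KG_K * delta_t_k)


# Cabinet densities quoted in the interview (kW per cabinet).
cabinets = [
    ("Typical colocation cabinet", 4.0),
    ("High-end colocation cabinet", 12.0),
    ("AI liquid-cooled cabinet", 100.0),
]

for label, kw in cabinets:
    flow = coolant_flow_kg_per_s(kw, ASSUMED_DELTA_T_K)
    # One kilogram of water is roughly one liter, so kg/s is close to L/s.
    print(f"{label}: {kw:.0f} kW -> {flow:.2f} kg/s of water")
```

Under these assumptions the required flow scales linearly with power, so a 100 kW cabinet needs about 25 times the water flow of a 4 kW cabinet.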

Data centers are expected to account for nearly 9% of U.S. electricity consumption by 2030. Are there any design considerations to make these facilities more sustainable and efficient?

Joy: Data centers are the most efficient buildings in the world, and they’re efficient by nature, because any ounce of additional electricity is extra money, and operators don’t want to spend any more than they have to. So, when we talk about data centers being power hungry, it’s not because the building is inefficient. It’s actually capacity-driven. That is the absolute baseline power needed to run the necessary capacity.

We are always looking at more sustainable measures in the building design itself. Our clients have huge sustainability goals, especially on the building side. We ensure all of our projects comply with the Gensler Product Sustainability (GPS) Standards and that we’re not using any red-listed materials for interior finishes. We’re also looking at lower-carbon concrete and steel. For example, we’re working with Microsoft on a data center project in Virginia that uses cross-laminated timber (CLT) while cutting back on steel and concrete.

Ryan: In previous years, many of the mechanical systems were mixing air, like they do in an office setting. But there’s been a shift in the last decade or so, where now everything’s contained through a process we call hot aisle or cold aisle containment. Now, the cold and hot air are kept separate, so these big buildings become highly efficient. When you’re trying to pack all of these individual components into a contained facility, everything has to have a very specific reason why it’s there for the entire system to work.

Joy: I think utilities are where some of the sustainability measures fall short. Our clients are looking at ways to use less water or power, but not every jurisdiction has industrial water loops available for reuse, and not all of them have converted to wind, solar, or other sustainable energy sources.

Nuclear energy is probably the cleanest source outside of wind, and it can provide a lot more power. The challenge is getting energy to these data centers because you lose electricity along the wire, so providers are looking for sources that are closer to existing and new infrastructure. We do a lot of hydropower in the Midwest and states like Oregon. So, there is a move to be more sustainable there, but much of it falls on the utility companies.

How are data center operators looking to solutions like microgrids, small modular reactors, and other means of on-site energy generation to address these challenges?

Ryan: Most operators are talking about nuclear and small modular reactors (SMRs), potentially on-site for power generation in the future. Reliability is a big concern for data centers, and if they have more control over the power directly to the data center, they’ll pursue that, especially because relying on the grid for power is a bottleneck for many clients in developing sites.

Joy: We’re not seeing a lot of this right now, because many people don’t want an SMR sitting close to their home. And there’s probably going to be some pushback on on-site power generation, since the utilities are going to lose money. I think there’s going to have to be a lot of work with legislation and jurisdictions, and safety is going to have to be vetted before we really start to see SMRs be adopted at scale. So, that will be an interesting trend to watch over the next few years, and will be very exciting.

Where are you seeing major hotspots for data center growth?

Ryan: In many top-tier markets, like Virginia or Silicon Valley, it’s challenging to get the power, land, and approval. So, there’s more pushback in those areas, and you’re seeing data center clients look elsewhere where they can be incentivized based on different tax exemptions. So, you’ll see data centers pop up in those locations.

Joy: Atlanta is the next big hotbed outside of Virginia. But there’s a lot of work outside the U.S., in Mexico, Canada, Malaysia, and India. Per capita, the U.S. is the biggest hotspot for data center development, but we are starting to see that growth elsewhere as the rest of the world requires the capacity as well.

What other trends are you seeing in data center design?

Joy: One of the big things that we’re seeing is that a higher level of design is being required on the exterior of these projects, so that they blend in as much as possible with their surroundings. We try to work with the jurisdictions and have some dialogue with the community about how these facilities are designed and built to ensure that we are sensitive to their concerns.

Ryan: Aesthetics is one thing in data center design, but where our skill set shines is in creating really efficient buildings. There’s so much coordination between mechanical and electrical, and we make sure these two main drivers are in alignment and that they both work when it comes to construction. And we often find opportunities to create efficiencies within these buildings that translate to cost savings or speed-to-market for our clients.
